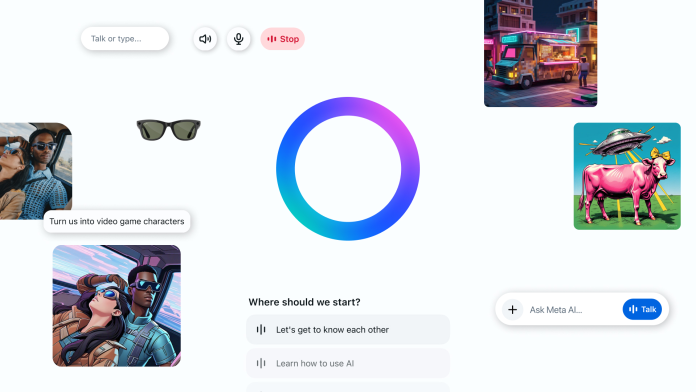

Meta has introduced a new standalone Meta AI app powered by its latest large language model, Llama 4, marking a significant step toward more personal and intuitive AI experiences. The app offers users a voice-first interface designed to make conversations with AI feel more natural, helpful, and socially connected.

This launch expands on Meta AI’s existing presence across WhatsApp, Instagram, Facebook, and Messenger. Now, users can engage with Meta AI in a dedicated space designed around voice conversations, with features that support multitasking and seamless interaction.

While voice interaction with AI is not new, Meta has enhanced the experience with a demo of full-duplex speech technology, meaning the system can listen and speak at the same time, much as people do in conversation. The demo, available in the US, Canada, Australia, and New Zealand, generates speech directly in a conversational style rather than reading written responses aloud. The result is a more fluid, lifelike dialogue that responds in real time.

Users can toggle this feature on or off, and a visible icon appears whenever the microphone is in use. A new “Ready to talk” setting also lets users keep voice mode active by default, making it easier to converse with Meta AI while on the move or juggling other tasks.

Meta AI is designed to get to know its users over time. It adapts its responses based on context and on information the user chooses to share, such as favorite activities, interests, or content they engage with on Meta platforms. Personalized responses are now available in the US and Canada, with even more tailored interactions for users who connect both Facebook and Instagram through Meta’s Accounts Center.

This personalized approach is central to Meta’s vision of an AI that acts not just as a tool, but as a companion that understands and anticipates individual needs.

The new app supports Meta AI’s image generation and editing features through both text and voice input. A Discover feed encourages users to explore and share creative prompts, remix ideas from others, and learn new ways to use the AI. Importantly, no content is shared publicly unless the user chooses to post it.

The app is also being integrated with the Meta View companion app used by Ray-Ban Meta smart glasses. In supported countries, users can start a conversation using their glasses and continue it in the app or web interface. Although this integration currently flows in one direction—from glasses to app or web—it sets the stage for more fluid cross-device experiences.

Existing Meta View users will see their device settings and media migrate automatically to the updated app’s new Devices tab.

Meta has upgraded the web experience to match the capabilities of the new mobile app. Voice interaction and the Discover feed are now part of the web interface, which has also been optimized for larger screens and desktop use. Enhancements to the image generation tool include new presets for style, mood, lighting, and color.

In select countries, Meta is testing a document editor that allows users to generate content with text and images, then export it as a PDF. A separate test is underway for importing documents into Meta AI for analysis, further expanding its utility.

With the launch of this app, Meta is blending its years of experience in personalization with the latest advancements in generative AI. Powered by Llama 4, Meta AI is more conversational, more relevant, and more integrated than ever before.

Whether chatting with friends on Messenger, scrolling Instagram, wearing smart glasses, or working at a desktop, users will find Meta AI ready to assist. This release reflects Meta’s broader ambition: to create an AI that not only understands what users want but adapts to how they live.
