As artificial intelligence systems move from perception-driven automation to decision-making under uncertainty, a new constraint has emerged. The limiting factor is no longer the ability to detect objects or follow predefined policies, but the capacity to reason through rare, ambiguous, and causally complex scenarios. For autonomous vehicles and intelligent machines operating in open-world environments, these long-tail situations represent the primary barrier to scalable deployment.

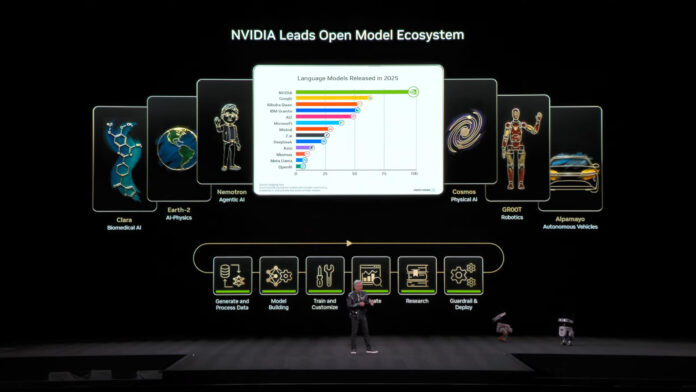

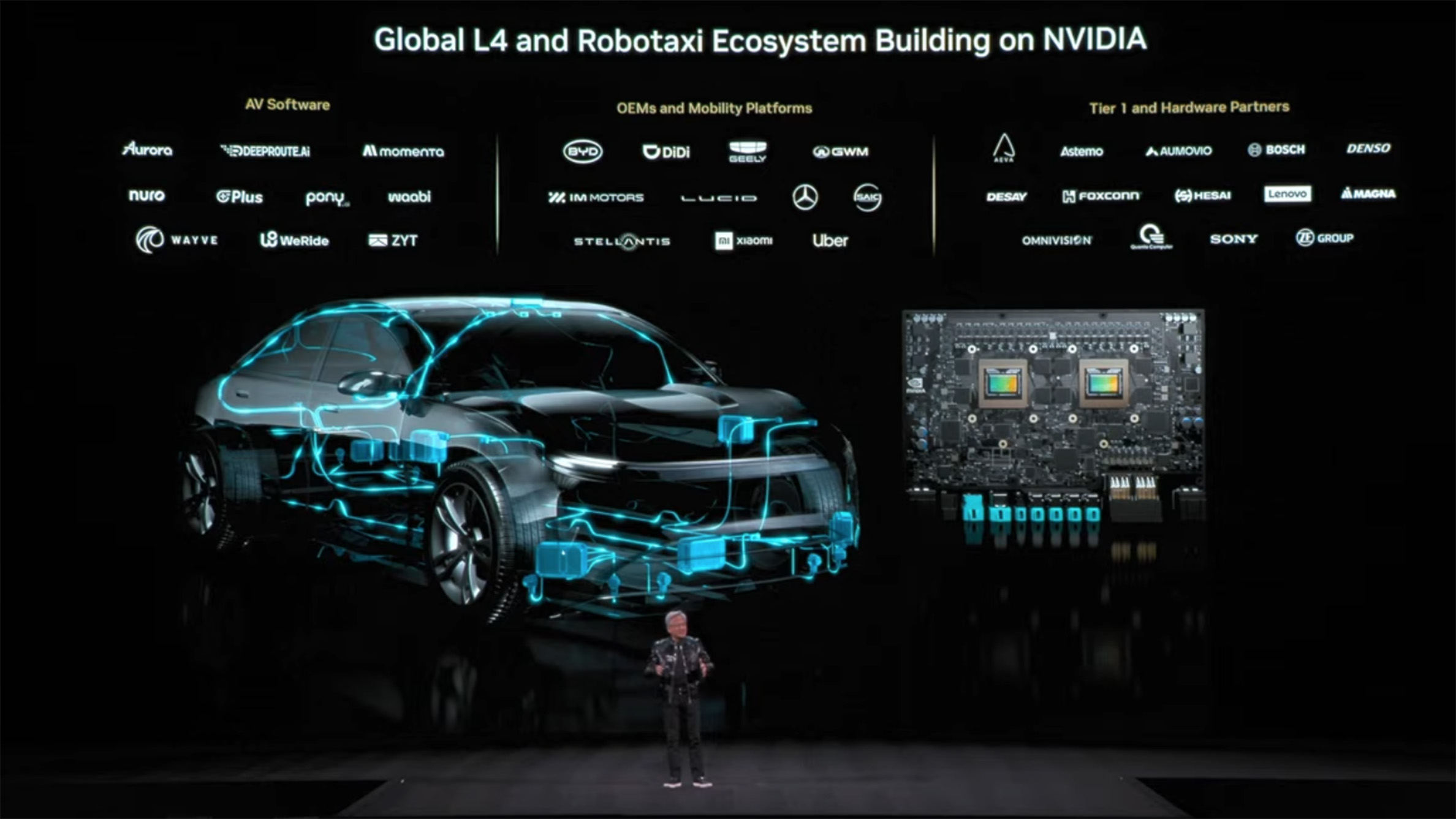

At CES 2026, NVIDIA introduced the NVIDIA Alpamayo family, an open ecosystem of reasoning-based AI models, simulation frameworks, and datasets designed to address this constraint directly. NVIDIA positions the announcement as the first release of an open reasoning vision language action (VLA) model tailored to long-tail autonomous driving challenges, and it extends the same architectural principles to robotics and broader physical AI systems.

The Alpamayo initiative reflects a broader shift in AI system design. As physical AI applications scale, success increasingly depends on operational resilience, explainability, and the ability to generalize beyond training distributions. These characteristics are not incidental features but core system properties that must be designed into models, data pipelines, and development workflows.

The Long-Tail Problem in Autonomous Systems

Autonomous vehicles are expected to operate safely across a near-infinite combination of road geometries, weather conditions, traffic behaviors, and human interactions. While perception accuracy has improved steadily, rare and complex scenarios continue to dominate risk profiles. These include unusual human behavior, unexpected obstacles, and edge cases that fall outside statistical norms.

Traditional autonomous vehicle architectures separate perception, prediction, and planning into modular components. While this approach supports incremental improvements, it often struggles to scale when vehicles encounter novel situations. End-to-end learning architectures have reduced system complexity, but they can fail unpredictably when inputs diverge from training data.

Research increasingly suggests that overcoming these limitations requires models capable of explicit reasoning about cause and effect. Rather than reacting to patterns alone, systems must be able to evaluate alternatives, anticipate outcomes, and justify decisions. This capability is particularly important for safety validation, regulatory confidence, and public trust.

Alpamayo and Reasoning-Based Architecture

The NVIDIA Alpamayo family introduces chain-of-thought reasoning into physical AI systems through vision language action models. These models are designed to process video inputs, reason step by step about environmental dynamics, and generate both actions and explicit reasoning traces. This approach brings humanlike decision-making structures into autonomous driving and robotics, improving both performance and interpretability.

Crucially, Alpamayo models are not intended to run directly in vehicles or robots. Instead, they function as large-scale teacher models. Developers can fine-tune, distill, and adapt them into smaller runtime models suitable for deployment. This design reflects a pragmatic understanding of production constraints while preserving access to advanced reasoning during development.
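The source does not describe NVIDIA's distillation recipe. To illustrate the generic teacher-student idea, here is a minimal sketch of classic soft-target knowledge distillation: a small student is trained to match the temperature-softened output distribution of a large teacher. The discrete set of candidate maneuvers, the function names, and the temperature value are hypothetical choices for illustration; distilling continuous trajectories and reasoning traces would need different loss terms.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2
    so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    # Hypothetical scores over three candidate maneuvers:
    # keep lane, slow down, change lane left.
    teacher = [2.0, 1.0, 0.1]
    student = [1.5, 1.2, 0.3]
    print(distillation_loss(teacher, student))
```

A higher temperature exposes more of the teacher's relative preferences among non-top choices, which is the extra signal a compact runtime model can learn from beyond hard labels.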

The Alpamayo models are underpinned by the NVIDIA Halos safety system, which provides architectural support for safe deployment. By integrating reasoning with safety validation, NVIDIA aims to address not only technical performance but also governance and accountability requirements that increasingly shape autonomous system adoption.

An Open Development Loop for Physical AI

The Alpamayo family integrates three foundational components into a cohesive open ecosystem.

The first component is Alpamayo 1, which NVIDIA describes as the industry’s first open chain-of-thought reasoning vision language action model designed specifically for autonomous vehicle research. Alpamayo 1 has 10 billion parameters and processes video input to generate vehicle trajectories alongside reasoning traces that explain each decision. NVIDIA provides open model weights and open-source inference scripts, enabling developers to build tooling such as reasoning-based evaluators and automated labeling systems. Future iterations are expected to scale parameter counts, expand reasoning depth, and support commercial deployment scenarios.
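Because each output pairs a trajectory with a reasoning trace, evaluator tooling can check both. The sketch below is purely illustrative: the `ReasoningOutput` schema (waypoints in metres sampled every `dt` seconds plus a free-text trace), the acceleration limit, and the keyword check are all hypothetical assumptions, not Alpamayo 1's actual output format or any NVIDIA API.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class ReasoningOutput:
    # Hypothetical schema: (x, y) waypoints in metres, sampled every dt seconds.
    waypoints: List[Tuple[float, float]]
    dt: float
    reasoning: str


def max_acceleration(waypoints: List[Tuple[float, float]], dt: float) -> float:
    """Peak acceleration magnitude from finite differences of the waypoints."""
    vel = [((x1 - x0) / dt, (y1 - y0) / dt)
           for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:])]
    acc = [math.hypot((vx1 - vx0) / dt, (vy1 - vy0) / dt)
           for (vx0, vy0), (vx1, vy1) in zip(vel, vel[1:])]
    return max(acc, default=0.0)


def evaluate(output: ReasoningOutput,
             accel_limit: float = 4.0,
             required_terms: Sequence[str] = ()) -> List[str]:
    """Return a list of issues; an empty list means the sample passes."""
    issues = []
    if max_acceleration(output.waypoints, output.dt) > accel_limit:
        issues.append("trajectory exceeds acceleration limit")
    if not output.reasoning.strip():
        issues.append("missing reasoning trace")
    text = output.reasoning.lower()
    for term in required_terms:
        # Crude consistency check: the trace should mention key scene elements.
        if term.lower() not in text:
            issues.append(f"trace does not mention '{term}'")
    return issues


if __name__ == "__main__":
    sample = ReasoningOutput(
        waypoints=[(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)],
        dt=0.1,
        reasoning="The lane ahead is clear, so keep a constant speed.",
    )
    print(evaluate(sample, required_terms=("lane",)))
```

The same pass/fail signal could drive an automated labeling pipeline, routing flagged samples to human review rather than accepting them as training labels.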

The second component is AlpaSim, a fully open-source end-to-end simulation framework for high-fidelity autonomous vehicle development. AlpaSim provides realistic sensor modeling, configurable traffic dynamics, and scalable closed-loop testing environments. This enables rapid policy iteration and systematic validation of reasoning-based behaviors before real-world deployment.

The third component is the Physical AI Open Datasets, which include more than 1,700 hours of driving data collected across diverse geographies and conditions, with a particular emphasis on rare and complex edge cases. The datasets are designed to support training and evaluation of reasoning architectures that must generalize beyond common scenarios.

Together, these components form a self-reinforcing development loop. Models generate hypotheses, simulation environments test behaviors at scale, and datasets ground learning in real-world complexity. For AI and computer companies, this loop reduces development risk while improving robustness and reproducibility.

Industry and Research Alignment

The Alpamayo initiative has attracted interest from mobility leaders such as Lucid, Jaguar Land Rover, and Uber, as well as from the autonomous driving research community, including Berkeley DeepDrive. These organizations view reasoning-based architectures as a prerequisite for advancing Level 4 autonomy in a responsible and scalable manner.

From an industry perspective, open and transparent development is increasingly aligned with stakeholder expectations. Regulators, partners, and customers are demanding systems that can explain decisions and demonstrate consistent behavior under uncertainty. Alpamayo’s open design supports this requirement by enabling shared evaluation frameworks and reproducible research.

Extending Reasoning Beyond Vehicles

While Alpamayo focuses on autonomous driving, the underlying architectural principles extend across physical AI domains. NVIDIA is applying the same reasoning-centric approach to robotics, industrial automation, healthcare devices, and edge AI systems.

At CES 2026, NVIDIA also announced new open models and frameworks for robot learning and reasoning. These include NVIDIA Cosmos Transfer 2.5 and Cosmos Predict 2.5 for world modeling and synthetic data generation, Cosmos Reason 2 for reasoning-based vision language understanding, and Isaac GR00T N1.6, a reasoning vision language action model designed for humanoid robots. These models allow developers to bypass resource-intensive pretraining and focus on task-specific adaptation.

Simulation and evaluation are supported by Isaac Lab Arena, an open-source framework for large-scale robot policy benchmarking, and OSMO, a cloud-native orchestration framework that unifies data generation, training, and testing across heterogeneous compute environments. These tools address workflow fragmentation, a persistent challenge in robotics and physical AI development.

Edge Deployment and Compute Strategy

For deployment at the edge, NVIDIA introduced the Jetson T4000 module, powered by the Blackwell architecture. The module delivers four times the energy efficiency and AI compute of the previous generation, with 1,200 FP4 teraflops and 64 gigabytes of memory in a configurable 70-watt envelope. This provides a cost-effective upgrade path for existing Jetson Orin deployments.
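As a rough worked figure from the numbers above, assuming the quoted 1,200 FP4 teraflops is a peak figure reached at the top of the 70-watt envelope (the source does not say which power mode the figure corresponds to), the peak compute per watt is approximately:

$$
\frac{1200\ \text{TFLOPS (FP4)}}{70\ \text{W}} \approx 17.1\ \text{TFLOPS/W}
$$

Sustained efficiency on a real workload will typically be lower than this peak ratio.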

NVIDIA IGX Thor extends this capability to industrial environments, combining high-performance AI computing with enterprise software support and functional safety. These platforms enable reasoning-capable systems to operate reliably in energy-constrained and safety-critical settings.

Strategic Takeaway for AI and Computer Companies

The Alpamayo announcement signals a structural shift in physical AI development. Competitive advantage is increasingly determined by the ability to reason, generalize, and explain decisions under uncertainty, not simply by perception accuracy or compute scale.

For AI and computer companies, Alpamayo offers a blueprint for building systems that are resilient, interpretable, and aligned with long-term deployment realities. By integrating open models, simulation, and data into a unified development loop, NVIDIA is reducing the friction between research and production.

The broader implication is clear. As physical AI systems move closer to widespread deployment, reasoning becomes a core infrastructure capability. Organizations that treat it as a first-class design constraint will be better positioned to scale safely, earn stakeholder trust, and translate technical innovation into durable real-world impact.
