At CES 2026 today, NVIDIA announced the Rubin platform, marking a decisive moment in the evolution of artificial intelligence infrastructure. The announcement reflects a broader reality facing enterprises, governments, and AI-native organizations alike: progress in AI is no longer constrained primarily by model architecture, but by the economics, reliability, and scalability of the systems that run those models.

As AI workloads evolve toward long-horizon reasoning, persistent memory, and autonomous agentic behavior, infrastructure efficiency becomes a first-order strategic variable. The Rubin platform is NVIDIA’s response to this inflection point. Its core premise is both technical and economic: AI adoption at scale requires system-level redesign, not incremental component upgrades.

Compared with the NVIDIA Blackwell platform, Rubin delivers up to a tenfold reduction in inference token cost and cuts the number of GPUs required to train mixture-of-experts models by a factor of four. These gains fundamentally alter the cost curve of AI deployment, shifting advanced AI from a capital-intensive constraint to an operationally scalable capability.

From CES Showcase to System-Level Strategy

CES announcements often highlight individual breakthroughs. Rubin, by contrast, represents a system-level strategy. NVIDIA positions the platform as a unified AI supercomputer composed of six tightly integrated chips: the NVIDIA Vera CPU, NVIDIA Rubin GPU, NVIDIA NVLink 6 switch, NVIDIA ConnectX 9 SuperNIC, NVIDIA BlueField 4 data processing unit, and NVIDIA Spectrum 6 Ethernet switch.

This extreme hardware and software codesign enables coordinated optimization across compute, networking, storage, and security. The result is not simply higher peak performance, but improved operational resilience, shorter time to deployment, and greater predictability in operating costs. For organizations scaling AI into production, these attributes are often more valuable than raw throughput.

The Rubin platform is designed to serve as a foundation for building, deploying, and securing the world’s largest AI systems at the lowest achievable marginal cost. In strategic terms, NVIDIA is signaling a shift from experimentation to industrialization of intelligence.

Scaling Reasoning, Not Just Parameters

Modern AI systems increasingly rely on multistep reasoning, long-context inference, and persistent memory. Agentic AI systems must retain state across interactions, coordinate with tools, and operate continuously rather than episodically. These requirements place stress on every layer of infrastructure.

Rubin addresses this challenge through five architectural innovations.

The sixth-generation NVIDIA NVLink delivers 3.6 terabytes per second of bandwidth per GPU, while the Vera Rubin NVL72 rack provides an aggregate 260 terabytes per second of bandwidth. This connectivity enables efficient scaling of massive mixture-of-experts models while reducing synchronization overhead. Built-in in-network compute accelerates collective operations, and enhanced serviceability features reduce downtime in large deployments.
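The per-GPU and rack-level bandwidth figures are consistent with each other, as a quick check shows. The sketch below only multiplies the two numbers quoted in this article; the constant and function names are illustrative, not part of any NVIDIA tooling.

```python
# Figures quoted in the article; the names below are illustrative.
NVLINK6_TBPS_PER_GPU = 3.6   # terabytes per second per GPU
GPUS_PER_NVL72_RACK = 72     # Rubin GPUs in a Vera Rubin NVL72 rack


def aggregate_nvlink_tbps(gpus: int, tbps_per_gpu: float) -> float:
    """Sum per-GPU NVLink bandwidth across all GPUs in a rack."""
    return gpus * tbps_per_gpu


rack_tbps = aggregate_nvlink_tbps(GPUS_PER_NVL72_RACK, NVLINK6_TBPS_PER_GPU)
print(f"{rack_tbps:.1f} TB/s")  # 259.2 TB/s, which the article rounds to 260
```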

The NVIDIA Vera CPU is designed specifically for agentic reasoning workloads. Built with 88 custom Olympus cores, full Armv9.2 compatibility, and NVLink-C2C connectivity, Vera emphasizes power efficiency alongside performance. As CPUs increasingly orchestrate inference pipelines and memory coordination, this balance becomes strategically important.

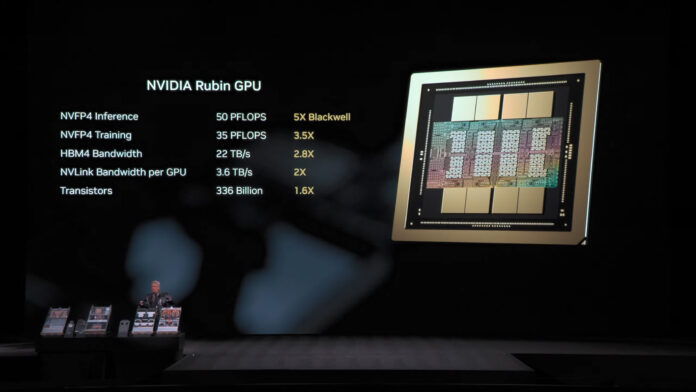

The NVIDIA Rubin GPU introduces a third-generation Transformer Engine with hardware-accelerated adaptive compression, delivering up to 50 petaflops of NVFP4 compute for inference. This capability underpins Rubin’s substantial reductions in inference cost per token.

Third-generation NVIDIA Confidential Computing extends data protection across CPU, GPU, and NVLink domains, enabling secure operation of proprietary models and sensitive workloads at rack scale. This capability is particularly relevant for enterprise, government, and regulated environments.

Finally, the second-generation reliability, availability, and serviceability engine introduces real-time health monitoring, fault tolerance, and proactive maintenance. A modular, cable-free tray design enables assembly and servicing up to eighteen times faster than prior-generation systems, reducing operational friction at scale.

Reframing Storage as a First-Class AI Constraint

As models scale and reasoning chains lengthen, storage has emerged as a limiting factor in AI systems. Long-context inference relies on large key-value caches that cannot remain indefinitely in GPU memory without degrading performance. Traditional storage architectures are not designed for this access pattern.
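To see why key-value caches outgrow GPU memory, it helps to estimate their size. The sketch below uses the commonly cited approximation for a decoder-only transformer (keys plus values, per layer, per token). The model shape is hypothetical and chosen only for illustration; it does not describe any specific model or NVIDIA product, and real systems add paging and quantization details that this ignores.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, batch=1, bytes_per_value=2):
    """Approximate KV cache size for a decoder-only transformer.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 assumes 16-bit storage.
    """
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_value


# Hypothetical model shape and context length, for illustration only.
size = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128, seq_len=1_000_000)
print(f"{size / 2**30:.1f} GiB per sequence")  # 305.2 GiB per sequence
```

Under these assumptions, a single million-token sequence already needs hundreds of gibibytes of cache, which is the access pattern the paragraph above describes.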

Announced alongside Rubin at CES 2026, the NVIDIA Inference Context Memory Storage Platform addresses this challenge directly. Powered by the NVIDIA BlueField 4 data processor, the platform treats storage as an extension of inference memory rather than a passive repository.

It enables high-bandwidth sharing of key-value cache data across clusters of rack-scale systems, boosting tokens per second by up to five times while delivering up to five times greater power efficiency than traditional storage approaches. This architecture supports persistent, multi-turn agentic reasoning without imposing GPU memory bottlenecks.
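The general idea of treating storage as an extension of inference memory can be illustrated with a toy two-tier cache. This is a minimal sketch of the concept only, not NVIDIA's implementation: the class `TieredKVCache`, its method names, and the least-recently-used eviction policy are all assumptions made for illustration.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier cache: a small fast tier that spills to a larger tier."""

    def __init__(self, fast_capacity: int):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # stands in for GPU memory, kept in LRU order
        self.spill = {}            # stands in for shared context storage

    def put(self, session_id: str, kv_blob: bytes) -> None:
        self.spill.pop(session_id, None)  # avoid a stale copy in the spill tier
        self.fast[session_id] = kv_blob
        self.fast.move_to_end(session_id)
        while len(self.fast) > self.fast_capacity:
            evicted_id, evicted_blob = self.fast.popitem(last=False)
            self.spill[evicted_id] = evicted_blob  # offload instead of discard

    def get(self, session_id: str):
        if session_id in self.fast:
            self.fast.move_to_end(session_id)
            return self.fast[session_id]
        if session_id in self.spill:
            blob = self.spill.pop(session_id)
            self.put(session_id, blob)  # promote back into the fast tier
            return blob
        return None  # a real system would have to recompute the context here


cache = TieredKVCache(fast_capacity=2)
for sid in ("a", "b", "c"):
    cache.put(sid, sid.encode())
print(sorted(cache.fast), sorted(cache.spill))  # ['b', 'c'] ['a']
print(cache.get("a"))                            # b'a', restored from the spill tier
```

The design point is that an evicted session's context is moved rather than dropped, so a returning multi-turn conversation can be resumed without recomputing its cache from scratch.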

BlueField 4 also introduces Advanced Secure Trusted Resource Architecture, providing a unified control point for provisioning, isolating, and operating large-scale AI environments. As AI factories adopt bare-metal and multi-tenant deployment models, this capability becomes essential for maintaining governance without sacrificing performance.

Networking as a Force Multiplier

Networking increasingly determines whether AI systems scale smoothly or fragment under load. Rubin’s networking architecture reflects this reality.

NVIDIA Spectrum 6 Ethernet introduces AI-optimized fabrics designed for scale, resilience, and energy efficiency. Spectrum-X Ethernet Photonics switch systems deliver five times better power efficiency and significantly improved uptime through co-packaged optics. Spectrum-XGS Ethernet further enables facilities separated by hundreds of kilometers to operate as a single logical AI environment.

These capabilities are foundational for the next generation of AI factories, including environments that scale to hundreds of thousands of GPUs and beyond.

Deployment Models and Ecosystem Alignment

Rubin is delivered in multiple configurations to support diverse workloads. The Vera Rubin NVL72 rack integrates 72 Rubin GPUs and 36 Vera CPUs into a unified, secure system optimized for large-scale reasoning and inference. The HGX Rubin NVL8 platform supports x86-based generative AI and high-performance computing workloads. NVIDIA DGX SuperPOD serves as a reference architecture for deploying Rubin systems at scale.
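The NVL72 figures can be combined with the per-GPU compute number quoted earlier in this article for a rough sense of rack scale. The sketch below is simple multiplication of an "up to" peak figure, so the result is a theoretical ceiling under that assumption rather than a measured or officially stated rack specification; the variable names are illustrative.

```python
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
PEAK_NVFP4_PFLOPS_PER_GPU = 50  # "up to" inference figure quoted in the article

# Ratio of GPUs to CPUs within one Vera Rubin NVL72 rack.
gpus_per_cpu = GPUS_PER_RACK // CPUS_PER_RACK

# Theoretical peak NVFP4 inference compute per rack, in exaflops.
rack_peak_eflops = GPUS_PER_RACK * PEAK_NVFP4_PFLOPS_PER_GPU / 1000

print(gpus_per_cpu)      # 2
print(rack_peak_eflops)  # 3.6
```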

NVIDIA confirmed at CES 2026 that Rubin is in full production, with partner systems expected to be available in the second half of 2026. Major cloud providers plan to deploy Rubin-based instances beginning in 2026. Microsoft will integrate Vera Rubin NVL72 systems into next-generation AI data centers, including Fairwater AI superfactory sites. CoreWeave will integrate Rubin into its AI cloud platform, operating it through CoreWeave Mission Control to maintain flexibility and production reliability.

NVIDIA also announced an expanded collaboration with Red Hat to deliver a complete AI software stack optimized for Rubin, including Red Hat Enterprise Linux, Red Hat OpenShift, and Red Hat AI. This alignment underscores the importance of pairing infrastructure innovation with enterprise-grade software adoption.

Strategic Takeaway

The Rubin announcement at CES 2026 signals a shift in how AI advantage is created. The defining variable is no longer simply model size or algorithmic novelty, but the ability to scale reasoning economically, securely, and reliably.

For engineering leaders, Rubin provides a blueprint for designing AI systems as integrated, resilient platforms rather than collections of optimized parts. For executives, it reframes AI infrastructure as a strategic asset that determines speed, cost structure, and competitive durability.

In this sense, Rubin is less a product launch than an architectural declaration. The future of AI belongs to organizations that can industrialize intelligence, not merely experiment with it.
