At CES 2026, AMD opened the show with a keynote that made one thing clear: the company sees AI not as a feature layered on top of computing, but as the next phase of the computing stack itself. From massive data center infrastructure to personal PCs, embedded systems, and classrooms, AMD used the stage to explain how its hardware, software, and partnerships are starting to turn AI from a promise into something people can actually use.

Chair and CEO Lisa Su framed the moment as a transition point. AI adoption is accelerating quickly, and the demands it places on computing are growing just as fast. Training larger models, running inference at scale, and bringing AI closer to users all require new approaches to performance, efficiency, and system design. AMD’s answer is an end-to-end portfolio that stretches from rack-scale data center systems down to the devices people interact with every day.

A look at what yotta-scale computing actually means

At the top of the stack, AMD provided an early look at Helios, its rack-scale platform designed as a blueprint for what the company calls yotta-scale AI infrastructure. The premise is a steep rise in demand: global compute capacity today sits at around 100 zettaflops, and AMD expects that number to climb beyond 10 yottaflops within the next five years as AI training and inference workloads continue to explode.
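
To put that projection in perspective, the short Python sketch below converts both figures to FLOP/s and works out the implied growth. It assumes the five years compound evenly, which is a simplification rather than anything AMD stated. Under that assumption, a 100-fold increase (10 yottaflops is 10,000 zettaflops) corresponds to roughly 2.5x growth every year.

```python
# Back-of-the-envelope check of the keynote's global compute projection.
# Assumption: growth compounds evenly over exactly five years.

ZETTA = 10**21  # 1 zettaflop = 10^21 floating-point operations per second
YOTTA = 10**24  # 1 yottaflop = 10^24 floating-point operations per second

today = 100 * ZETTA  # ~100 zettaflops of global compute today
target = 10 * YOTTA  # >10 yottaflops expected within five years
years = 5

growth_factor = target / today               # total multiple over the period
annual_rate = growth_factor ** (1 / years)   # implied compound multiple per year

print(f"total growth: {growth_factor:.0f}x")          # total growth: 100x
print(f"implied yearly multiple: {annual_rate:.2f}x")  # implied yearly multiple: 2.51x
```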

Getting there is not just about building faster chips. AMD argues it requires open, modular system designs that can evolve over time, scale efficiently, and connect thousands of accelerators into a single, unified system. Helios is built around that philosophy. A single rack is designed to deliver up to 3 AI exaflops of performance, with the bandwidth and energy efficiency needed to train trillion-parameter models.

The platform brings together Instinct MI455X GPUs, EPYC “Venice” CPUs, and Pensando “Vulcano” networking, all tied together through the ROCm software ecosystem. The emphasis on openness is intentional. AMD wants Helios to be a foundation that partners can build on rather than a closed system locked to a single generation of hardware.

Alongside Helios, AMD expanded its data center AI roadmap. The company introduced the Instinct MI440X GPU, a new member of the MI400 Series designed specifically for on-premises enterprise AI deployments. Unlike massive hyperscale-focused accelerators, the MI440X is meant to fit into existing data center environments, supporting training, fine-tuning, and inference in a compact eight-GPU configuration.

The MI440X complements the previously announced MI430X GPUs, which target hybrid AI and high-precision scientific workloads. Those chips are already slated for large-scale systems such as the Discovery supercomputer at Oak Ridge National Laboratory and France’s Alice Recoque exascale system.

Looking ahead, AMD also previewed its next-generation Instinct MI500 Series GPUs, planned for launch in 2027. Built on the upcoming CDNA 6 architecture, a 2nm manufacturing process, and HBM4E memory, the MI500 Series is expected to deliver dramatic gains in AI performance over today’s accelerators. Real-world numbers will take time to materialize, but the roadmap signals how aggressively AMD expects AI workloads to grow.

Bringing AI closer to where people actually use it

While the data center story sets the ceiling, much of AMD’s keynote focused on where AI will matter most to everyday users: PCs and edge devices.

AMD introduced new Ryzen AI platforms designed to make AI a native part of the PC experience. The Ryzen AI 400 Series and Ryzen AI PRO 400 Series deliver up to 60 TOPS of NPU performance, enough to comfortably handle local AI tasks while still supporting cloud-connected workflows. Full ROCm support means developers can move workloads more easily between servers and client devices without rethinking their software stack.

The first systems based on these platforms are expected to ship in January 2026, with broader OEM availability following in the first quarter. AMD’s goal is straightforward. AI features should feel responsive, efficient, and always available, not gated behind constant cloud access.

AMD also expanded its high-end on-device AI offerings with new Ryzen AI Max+ processors. With support for up to 128GB of unified memory, these chips can run models with as many as 128 billion parameters locally. That capability opens the door to advanced inference, creative workflows, and even high-end gaming in thin-and-light laptops and small-form-factor desktops without relying on discrete GPUs.
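
A 128-billion-parameter model fits in 128GB only under an assumption about numeric precision, which the figures above do not specify. The Python sketch below is a rough, weights-only estimate; the precision labels are illustrative, and it ignores the KV cache, activations, and whatever the operating system reserves. At 16-bit precision the weights alone need about 256 GB, so running such a model locally implies quantizing to 8 bits or, more realistically, to around 4 bits.

```python
# Rough, weights-only memory estimate for running a large model locally.
# Assumptions: the precisions below are illustrative; KV cache, activations,
# and memory reserved by the OS are ignored, so real headroom is smaller.

PARAMS = 128e9   # 128 billion parameters
MEMORY_GB = 128  # unified memory ceiling quoted for Ryzen AI Max+

BYTES_PER_PARAM = {
    "16-bit": 2.0,
    "8-bit": 1.0,
    "4-bit": 0.5,
}


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


for precision, nbytes in BYTES_PER_PARAM.items():
    weights_gb = weight_footprint_gb(PARAMS, nbytes)
    headroom_gb = MEMORY_GB - weights_gb  # negative means the weights do not fit
    print(f"{precision:>6}: weights ~{weights_gb:.0f} GB, headroom ~{headroom_gb:+.0f} GB")
```

Treat the headroom figures as approximate: the unified memory pool is shared with the rest of the system, so the 8-bit case leaves essentially no room for anything but the weights.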

For developers who want a dedicated local AI system, AMD introduced the Ryzen AI Halo developer platform. Halo is a compact desktop PC built around Ryzen AI Max+ processors and designed to deliver strong performance per dollar for AI workloads. The focus here is practicality. Halo is meant to be easy to deploy, easy to develop on, and powerful enough to meaningfully reduce reliance on cloud resources. AMD expects it to be available in the second quarter of 2026.

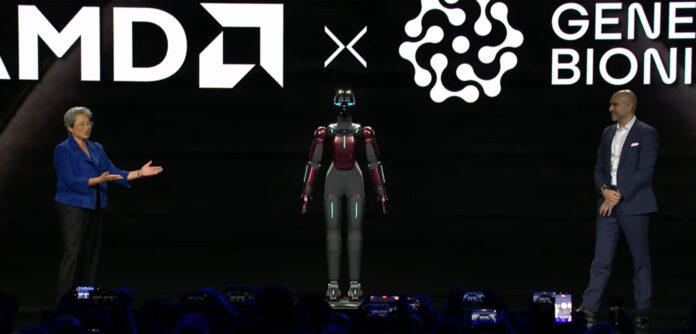

AI is also moving beyond traditional PCs, and AMD addressed that shift with the introduction of Ryzen AI Embedded processors. The new P100 and X100 Series target edge use cases like automotive digital cockpits, smart healthcare devices, autonomous systems, and humanoid robotics. These processors are designed for environments where power efficiency and reliability matter as much as raw performance, bringing AI closer to the physical world where decisions often need to happen in real time.

Partnerships, policy, and the people building what comes next

Throughout the keynote, AMD highlighted partnerships across research, healthcare, aerospace, and creative industries, including OpenAI, Luma AI, Liquid AI, World Labs, Blue Origin, AstraZeneca, Absci, and Illumina. Each example reinforced the same idea: AI progress depends on collaboration across hardware, software, and real world domains.

That broader view extended into public policy and education. Lisa Su was joined on stage by Michael Kratsios, Director of the White House Office of Science and Technology Policy, to discuss the Genesis Mission, a public-private initiative aimed at strengthening U.S. leadership in AI. As part of that effort, AMD is powering new AI supercomputers at Oak Ridge National Laboratory and working with partners to expand access to AI education.

AMD also announced a $150 million commitment to bring AI into more classrooms and communities, supporting hands-on learning and early exposure to the technology. The keynote closed by recognizing more than 15,000 students who participated in the AMD AI Robotics Hackathon with Hack Club, a reminder that the next phase of AI will be shaped not just by hardware roadmaps, but by who gets the chance to build with them.

Taken together, AMD’s CES 2026 keynote offered a grounded but optimistic view of where AI is headed. The company is betting that the future of AI computing will be defined by scale, openness, and proximity to users. Whether that vision plays out exactly as planned remains to be seen, but AMD is clearly positioning itself to be part of every layer of that future, from the largest data centers to the devices and people shaping what comes next.